We are live now: https://www.youtube.com/watch?v=9kbBmPbQ9zU


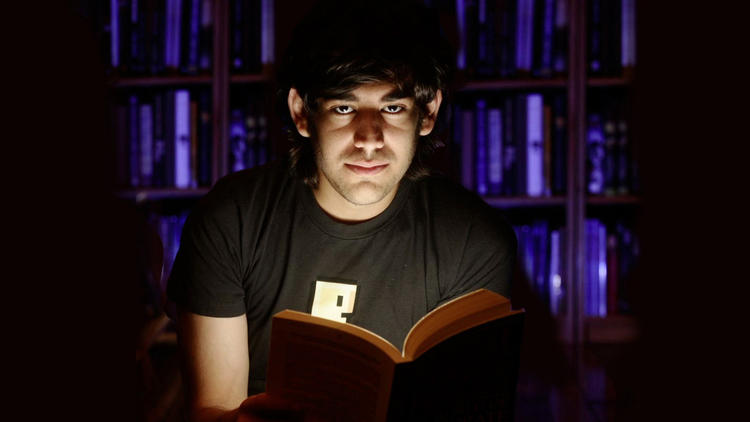

Today is the 11th Anniversary of Aaron’s Suicide


Please Join us this Saturday, January 13th at 2pm PST for our first Aaron Swartz Podcast.

We will have Andre Vinicius Leal Sobral of Brazil’s Aaron Swartz Institute and Timid Robot from Creative Commons.

Today is the 11th Anniversary of Aaron Swartz’ suicide.

Aaron would have been 37 years old on November 8, 2023.

For more background about what happened to Aaron, please read this post from 2021.

It seems fitting to revisit this poem about Aaron, written by Brewster Kahle, shortly after Aaron’s death, in 2013. It was filmed in 2015, and just published for the first time in January 2021.

Howl for Aaron Swartz

Written by Brewster Kahle, shortly after Aaron’s Death, on January 11, 2013.

Howl for Aaron Swartz

New ways to create culture

Smashed by lawsuits and bullying

Laws that paint most of us criminal

Inspiring young leaders

Sharing everything

Living open source lives

Inspiring communities selflessly

Organizing, preserving

Sharing, promoting

Then crushed by government

Crushed by politicians, for a modest fee

Crushed by corporate spreadsheet outsourced business development

New ways

New communities

Then infiltrated, baited

Set-up, arrested

Celebrating public spaces

Learning, trying, exploring

Targeted by corporate security snipers

Ending up in databases

Ending up in prison

Traps set by those that promised change

Surveillance, wide-eyes, watching everyone now

Government surveillance that cannot be discussed or questioned

Corporate surveillance that is accepted with a click

Terrorists here, Terrorists there

More guns in schools to promote more guns, business

Rendition, torture

Manning, solitary, power

Open minds

Open source

Open eyes

Open society

Public access to the public domain

Now closed out of our devices

Closed out of owning books

Hands off

Do not open

Criminal prosecution

Traps designed by the silicon wizards

With remarkable abilities to self-justify

Traps sprung by a generation

That vowed not to repeat

COINTELPRO and dirty tricks and Democratic National Conventions

Government-produced malware so sophisticated

That career engineers go home each night thinking what?

Saying what to their families and friends?

Debt for school

Debt for houses

Debt for life

Credit scores, treadmills, with chains

Inspiring and optimistic explorers navigating a sea of traps set by us

I see traps ensnare our inspiring generation

Leaders and discoverers finding new ways and getting crushed for it

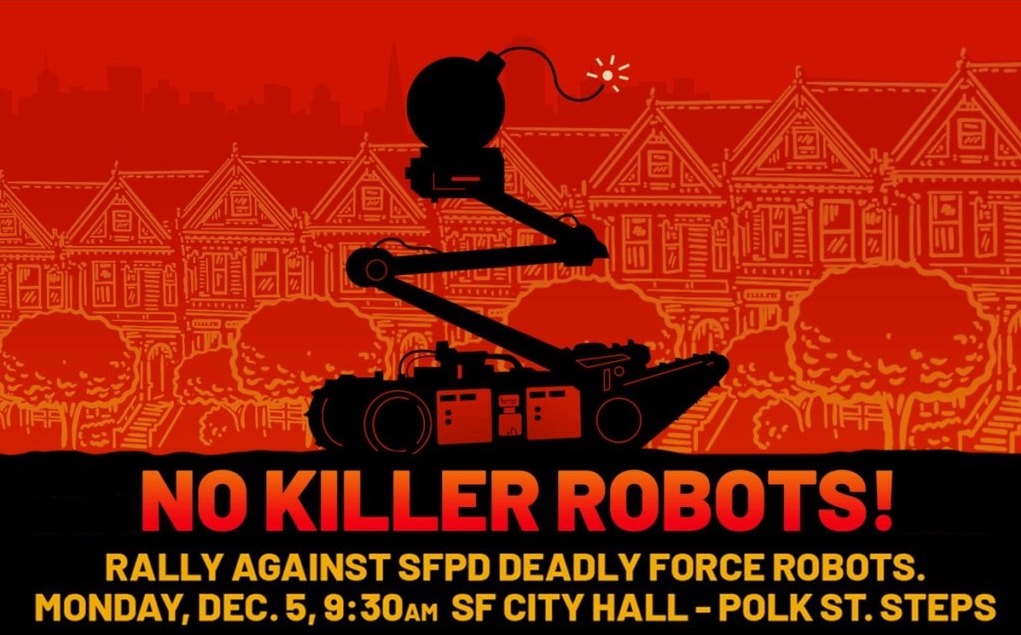

Transcription & Video/Audio of “No Killer Robots” Press Conference – December 5, 2022

UPDATE December 6th 3:35 PM:

Contact: Tracy Rosenberg, Co-Founder – Aaron Swartz Day Police Surveillance Project

email: tracy@media-alliance.org

phone: 510-684-6853

WE WON! The SF Board of Supervisors Voted 8-3 Banning SFPD from using Killer Robots!

The vote of 8-3 is to send the robot policy back to committee for further discussion and to have the current policy (unless and until a new policy comes back) be that robots with lethal force may not be used.

Thanks to everyone who worked together to make this happen!

SF No Killer Robots Press Conference December 5 2022

Video on YouTube here: https://youtu.be/_B6ncBo5PZk

Download the video and audio recordings here (CC0 1.0 Universal – This recording is in the public domain.) (Recorded by the Aaron Swartz Day Police Surveillance Project.)

Video Download (720)(Starts at 3:22): https://drive.google.com/file/d/11XsJ0r4HzkuQWYhHrHqGdOxciP95BZUl/view?usp=sharing

Video Download (4K)(Starts at 3:22): https://drive.google.com/file/d/1EVjxDw0ywhLzUXUQu_s5S6cpREUEO84O/view?usp=sharing

Audio Download: https://drive.google.com/file/d/1nsH3RJsfQ0qzQWuvIwa4BZb0i7q3-_jK/view?usp=sharing

Background Info On This Event Here: https://www.aaronswartzday.org/no-killer-robots-rally/

00:00 – Melissa Hernandez

Hi, everyone, thank you so much for your patience, we’re gonna go ahead and get started. Okay, well, my name is Melissa Hernandez. I’m a legislative aide to Supervisor Dean Preston. And I will be your emcee today. I’m going to keep this very short, because we have a lot of folks, a lot of folks who want to speak out against this. So I am going to go ahead and just dive into our speakers list. So our first speaker will be Supervisor Dean Preston.

00:57 – Dean Preston

Thank you all for being here. And a big shout out to my legislative aide Melissa Hernandez for all her work, and for emceeing. I can’t believe that in the City and County of San Francisco we’re even having to consider this issue. It seems like a complete no-brainer to me, completely reckless, that we would give police robots to kill people here in San Francisco. That is absolutely unacceptable. And we know, we know, that deadly force by the police department is disproportionately used against black and brown communities in San Francisco.

And this kind of technology would be no exception. So I want to thank my colleagues, President Walton, who you will hear from soon, and Supervisor Hillary Ronen, for standing strong on this. And also, I want to thank all of the advocates who have been speaking out, and who you’ll hear from today. And in particular, I want to thank the folks both at Electronic Frontier Foundation and the American Friends Service Committee for working with our office on a lot of the legal issues here, including the fact that we have now uncovered that the San Francisco Police Department did not post this policy, as they were required to do under state law, 30 days before the hearing. That is yet another reason that this Board of Supervisors should change course and send this issue back to committee instead of adopting it on final reading tomorrow.

So I will just say this, and then I will close out, because I know we have a lot of speakers. But there is no way. There is no way that I am going to sit by silently and allow a policy as dangerous and reckless as this to be adopted and go into effect in the city and county of San Francisco, we will fight this legislatively at the board. We will fight this in the streets and on public opinion. And if necessary, we’ll fight this at the ballot. So thank you all for your tireless advocacy and for being here.

03:05 – Melissa Hernandez

Thank you so much to Supervisor Dean Preston. I’d like to call on Supervisor Hillary Ronen.

03:15 – Hillary Ronen

Thank you, and thank you, everyone, for being here today. A couple of things. Because there wasn’t any notice that this extremely dangerous policy was going to be thrust upon us last minute, we still have hundreds of unanswered questions about the use of killer robots. Number one, have we had a deep conversation about the ethics of machines on the streets of San Francisco being armed and ready to kill human beings? If we have, I haven’t heard it. Number two, what are these robots? Were they designed to carry arms? We’ve been using them for quite some time. We’ve been using them for reconnaissance.

We’ve been using them to remove dangerous materials from places. But are they designed to carry and detonate dangerous weapons? We don’t know. We simply don’t know. And then finally, what training have the SFPD had to use these killer robots? Nobody’s talked about this basic question. So aside from the countless, literally countless, issues that this community has already brought up, we don’t even have basic information to make this decision. What we do know is that the robotics industry itself is very skeptical, and the majority of it is against robots being armed and used to kill human beings. This is not a policy that should ever go forward. But at the very least, there are hundreds of unanswered questions that we haven’t even begun to explore at the Board of Supervisors. And until we do, this ridiculous policy should not go forward.

05:18 – Melissa Hernandez

Thank you so much, Supervisor Ronen. Now we will hear from Board President Shamann Walton.

05:26 – Shamann Walton

Good morning. Good morning. Good morning. Not only is it ludicrous to militarize a local police department that was given 272 recommendations from the Department of Justice to reform, make changes, to learn how to de-escalate, to do everything they can to come up with the ability to not use lethal force. The fact that we would decide that now’s the time to militarize a local police department that has been under the gun, no pun intended, from the powers that push you to make better decisions, doesn’t make sense. Not only is this ludicrous because we understand and know that when you weaponize a police force, or when you give law enforcement entities more weapons to use in community, we know disproportionately that those weapons will be used against people of color, black people, brown people, people in isolated and disenfranchised communities. But let’s look at the practical aspects of this.

What if a robot is hacked? What if there is a malfunction with a robot? What if somebody who wanted to do harm to communities was somehow able to take over these machines? Because that’s what they are: machines. Now, some of you might say, well, that’s far-fetched to think about. Well, it was far-fetched to think that a live-shooting robot can go up to a tower and save lives versus harm other lives. So I just want to say that this policy has no place in San Francisco. It has no place. It has no place in any local law enforcement entity.

These types of machines were really designed to fight wars, and to be utilized in times where we’re not trying to use them against our residents. So I want to make sure that we do everything we can on the board of supervisors, that we do everything we can and community to fight against this policy. It’s important that we take a stand right now. Because if we don’t, with the increased surveillance, if we don’t, with the fact that killer robots are right now so far, allowed to be used in our cities, we’re going to continue to weaponize police departments versus focus on reform that we’ve all fought for for decades. So let’s join together and fight this policy and work to make the real change that we are supposed to have in our communities. Thank you.

08:24 – Melissa Hernandez

Thank you so much to Board President Shamann Walton. Turning to our lively crowd back here, people are fired up about this. I don’t blame them. I will be welcoming our next speaker, Yoel Haile, who is the director of criminal justice programs for the ACLU of Northern California.

08:48 – Yoel Haile

Good morning, everyone. We are here today in strong opposition to arming SFPD with killer robots. “Nothing is more important than stopping fascism, because fascism is going to stop us all.” Fred Hampton said this. For those who may not know, Fred Hampton was the chairman of the Illinois chapter of the Black Panther Party. Chairman Fred was assassinated by the Chicago PD and by the FBI at the age of 21. Fascism doesn’t just happen. Fascism happens little by little when we fail to hold lines that we should never cross. And this is one of those lines.

No matter what niche or rare situation SFPD claims that they will reserve killer robots for, giving them this power at all sets a precedent, a horrible precedent that echoes beyond just San Francisco. It is the first step down the road where the state uses robots to kill its own people. And we know who will suffer most from these weapons. Remember that this is the same SFPD who killed Mario Woods, Alex Nieto, Jessica Williams, Sean Moore, Keita O’Neil, Luis Góngora Pat, and many others. This is the same SFPD that pulls over and kills black and brown people at highly discriminatory rates. The police already shoot and kill black and brown people with near impunity. Allowing them to kill remotely will lead to more mistakes.

And as we have seen with many weapons and tools many times before, that will lead to more frequent use. Remote triggers are easier to pull, and will put the lives of black and brown people in more danger and violence at the hands of the police. This is not a responsible decision. We urge the Board of Supervisors to change their vote tomorrow and vote no against arming SFPD with killer robots. Thank you.

11:12 – Melissa Hernandez

Right, thank you. Thank you. All right, we have a lot of speakers. I’m going to try to get through the rest of our list here. Next, I would like to welcome Nicole Christen, the chair of the social and economic justice committee for SEIU 1021.

11:38 – Nicole Christen

Thank you and good morning, everyone. As she said, I am Nicole Christian. I am the social and economic justice chair of SEIU 1021, and I am here standing with President Walton, Supervisor Preston and Supervisor Ronen, not to ask but to demand that the rest of the board follow suit, follow their leadership, follow their example. Science fiction has no place in San Francisco on this day. There is no reason that we need to have robots who can walk into a building and blow it up. I want you to think about that.

Someone from a distance can walk a robot into your neighbor’s house, into your neighborhood, because someone up on high, who will not be affected at all, has decided that these people should die. That is not the police department’s decision. It is not their right to make that call. I ask you to urge other people to stand with you, to stand with us, to stand with labor, to stand with the Board of Supervisors and demand that this policy never sees the light of day. I don’t care if they gave proper notice or not. Killer robots have no place in our city. We work here. We live here. We dine here, we shop here. We experience the arts here.

San Francisco is an amazing city. Don’t let it succumb to ridiculous policies. Don’t let it succumb to killer robots. Stand with us. Fight with us. Raise your voice use your voice. Today is the day. Tomorrow is the day don’t let it go any further. It is up to all of us to make sure that these robots never hit the street. Not in San Francisco, not in any city. We are not going to turn San Francisco into a war zone. I will not stand by while families are displaced because of a bombing. We will not do it. I will stand with our Board of Supervisors. I will stand with you. Labor will stand with this city as we always have. And we will fight back and we will continue fighting. Don’t let this win. Don’t let this policy go through.

14:09 – Melissa Hernandez

Thank you. Thank you so much, Nicole. Next we have Matthew Guariglia, the surveillance policy analyst for the Electronic Frontier Foundation.

14:25 – Matthew Guariglia

Everybody, my name is Matthew Guariglia. I’m a policy analyst at the Electronic Frontier Foundation. And I’m here today with a letter signed by 35 Bay Area vital community groups, racial justice groups, civil rights groups, LGBTQ groups, and the labor movement, who join the groups up here today to ask that the Board of Supervisors not approve this dangerous proposal. It is not alarmist to be concerned with police deploying deadly force by a robot. We’re not here today because we’ve been duped by sensationalist headlines, as was accused.

We’re here today because we know exactly what comes next. We’re here today because we know the history. Because we read the news, we know exactly who ends up getting killed when police get access to new types of deadly force. We remember when police said that drones, or armored vehicles, or military surveillance equipment like cell site simulators would only be used in the most extreme circumstances, until, of course, departments across the country learned that they could deploy them in increasingly casual ways. It took tear gas only about a decade to go from being a universally condemned weapon of war to breaking up protests on the streets of the United States. And we won’t see that here with armed robots.

Robots are becoming more common. Departments across the country already have fleets of robots that are supposed to be used for bomb handling, like the ones that SFPD owns. If we pass this policy now, how long until all of them are armed as well? The world and all the rest of the United States are watching what we do here today. How long until we’ve normalized police sending out robots regularly? And as we speak, autonomous robots are already patrolling malls, parks and parking garages in the United States. It’s not alarmist, it’s already here. And that is why we are asking, we’re demanding, that the Board of Supervisors do what’s right, that they not approve this here or ever, because we know what happens next. And we know how these things will be used. So we urge the Board of Supervisors to go back on their vote from last week and not approve this dangerous policy. Thank you very much.

16:34 – Melissa Hernandez

All right. I want to make sure that Jafria is still here. Yes. We will now be hearing from Jafria Morris, the co-founder of SF Black Wall Street.

16:48 – Jafria Morris

Hello, everyone. SF Black Wall Street is a coalition of black leaders coming from the southern side of the city. And we know so well, like what President Walton was saying, about the need for police sometimes in our areas. But we also know when we’re over-policed. To have robots that kill people, we know who they will kill. This is nothing but government execution. You can ask every last one of those supervisors if they believe in the death penalty, and I’m sure a majority of them will say no. But when you arm lethal robots to go any place to kill, you are doing capital punishment without due process. In our mind, San Francisco is no longer progressive.

We seen it through the recalls. And we see the turn of time. It is for all of us to stand up and say what San Francisco’s true values are here. And these killer robots are not. As I conclude, I want to say I heard one supervisor say, “Well, this is not RoboCop.” It’s very much RoboCop. You have a human brain taking a remote control device into somewhere, and we’re allowing the device to be the eyes of the human to determine when and where to deploy. That is RoboCop. Murphy had an eye. So I want you all to really believe us when we say it will be people of color. It will be misused. We just had the police kill a victim and a perpetrator Owens this year. And we want to give them the right, the police chief or anyone the right, to determine who gets to live and die. That cannot happen in San Francisco. Thank you.

18:34 – Melissa Hernandez

Thank you so much. Next we will be hearing from John Lindsay-Poland, co-director of the California Healing Justice Program of the American Friends Service Committee.

18:45 – John Lindsay-Poland

Good morning. Besides the people of San Francisco, there are at least three groups of people who are watching what the Board of Supervisors does tomorrow. First, most law enforcement agencies haven’t thought much about strapping explosives to robots. The robots aren’t designed for it, and they would have to be jerry-rigged, as Dallas did when they blew up someone. The SFPD hadn’t really thought about it either. The entire San Francisco PD process with militarized equipment has been improvised. Law enforcement agencies have invested heavily in robots and drones in the last 15 years. And if San Francisco authorizes lethal use of robots, you can bet that sheriffs in the Central Valley or up north, where sheriffs see themselves as autonomous authorities, or even the San Francisco Sheriff itself, which has not yet submitted their policy, will also be using robots to kill people in many different types of situations.

Second, the robot companies are watching, because if San Francisco authorizes explosive robots, they will see a market for them all across the country, in states where use policies are required, and they feature bomb-bearing attachments. Third, other elected officials in California are watching to see what the San Francisco Board of Supervisors does, whether they defer to police and what the police want, or they follow the state law, which among other things requires a 30-day period for the public to be informed and to comment, as we are now. Here, this did not happen.

On November 14, the San Francisco PD suddenly changed its policy, removing 375 assault rifles from the policy, claiming they are, quote, “standard issue” for the department, even though the department has 2300 sworn officers. But the law to allow public comment wasn’t followed. The Board of Supervisors should send the policy back to make it transparent, take out killer robots, and take out the hidden assault rifles. Thank you.

20:54 – Melissa Hernandez

Thank you so much. Next, I would like to call up James Burch from the Anti Police-Terror Project.

21:10 – James Burch

I’m grateful for the opportunity. Hello, everyone. My name is James Burch. I’m the policy director for the Anti Police-Terror Project. I’m grateful for the opportunity to speak here. Thank you to the organizers and thank you all for being here. It’s critical to provide context for policies like this, voted forward by many members of the Board of Supervisors. Because policies like this one do not occur in a vacuum. Robots with the ability to exercise deadly force by explosion are not authorized in a vacuum.

Let us be clear, this policy was passed because many politicians in San Francisco are clear that their police department can do what it wants. Whether it be spying on civilians without authorization, sending racist text messages or committing cold-blooded murder on city streets. Just last Friday, the family and supporters of Mario Woods gathered to honor the fifth anniversary of his murder. Mr. Woods’ shooting was captured from multiple angles, each one showing clearly that Mario Woods was murdered. Despite the abundance of evidence, no one was held accountable. And that’s the theme when reviewing police murders in the city of San Francisco. Despite an abundance of evidence, no accountability. That’s right. Thank you. That’s the truth for Amilcar Perez Lopez. That’s the truth for Alex Nieto. That’s the truth for Jessica Williams. That’s the truth for Gus Rugeley. The list goes on and on and on. We could be here all day. All of these folks were killed without accountability.

In fact, two police killings that occurred in San Francisco are currently on the desk of District Attorney Brooke Jenkins. DA Jenkins, ostensibly, is considering whether to move forward with the prosecutions of the police who killed Luis Góngora Pat and the police who killed Keita O’Neil. Thank you. But those of us who follow the political movement in the city of San Francisco know that DA Brooke Jenkins was not elected to hold police accountable. She was elected to remove any obstacles in their way. Her ascension to office is but a piece of the larger mosaic that serves to send the people of San Francisco a clear message.

This is a town where police are not held accountable by design. This permissive approach to policing, the belief that if the police get what they want, they will surely follow the rules and somehow magically keep us safe, is as preposterous as it sounds. We see time and again the results of this strategy. Providing carte blanche to law enforcement not only fails to improve public safety but makes our marginalized communities less safe, as law enforcement does as they please.

The San Francisco Police Department’s recent history is full of examples that make clear the price the people of San Francisco are paying for this deadly strategy of no accountability. This was evidenced through the racist text messaging scandal, where black and brown civilians were referred to as wild animals and worse, and had San Francisco police officers actively wishing violence upon them. This was evidenced in 2020 when the San Francisco Police Department violated policy to directly access CCTV cameras in the Union Square Business Improvement District. This is evidenced by the San Francisco Police Department’s resistance to use of force policy changes made following the spate of police murders in 2015 and 2016, insisting on, among other things, permission to shoot upon moving vehicles.

This is evidenced by the disrespectful and dismissive approach taken by Chief Bill Scott to anyone questioning his authority, like he did when he was asked formally by city supervisors why the clearance rates in San Francisco are so low. This is also evidenced by the fact that the city of San Francisco has still failed to address the policies, or lack thereof, regarding the actions of undercover officers, undercover investigators, excuse me, assigned to San Francisco’s massage parlors. And again, in light of all of this evidence, and the context behind it, I’d like to make sure folks here in San Francisco are familiar with the murder of Joshua Pawlik.

In Oakland, Joshua Pawlik, an unhoused man who was sleeping, was killed by five San Francisco, excuse me, five Oakland Police Department officers from atop an armored vehicle using assault weapons. Right. These are toys that we were told, like you were told here, are only going to be used under extraordinary circumstances. These are toys that we were told, as you were told here, that the police department needs to effectuate its responsibility. And it’s all garbage. It’s all garbage. Right? We know how the police will use this vehicle. The gentleman from the EFF, the person from the EFF, excuse me, said so clearly earlier: it is black and brown communities that bear the brunt of these weapons, right, these toys that law enforcement claims they need. So again, we can heed this history that I put before you, we can listen to the folks here who know who SFPD are and know what they do. Or we can meet here again when we’re doomed to repeat this history. Thank you.

26:58 – Melissa Hernandez

Everyone, we have our last two speakers. Apologies. I’m just trying to get through the rest of the speakers. So we have our last two speakers. Angela Chan, chief of policy for the San Francisco Public Defender, will be next.

27:17 – Angela Chan

Thank you. No justice, no peace, no racist police. No killer robots. I had to get that chanted. Thank you. Good morning. My name is Angela Chan and I’m the chief of policy at the San Francisco Public Defender’s Office. Thank you to Supervisor Preston, and your team, Melissa, for organizing this much needed action. Mano Raju, our public defender, is away at the national public defenders conference. He stands fully behind this rally against SFPD using these military weapons. It is impressive today, the power of community, the power of community to make sure that our elected leaders and the police have to listen to us. It’s an impressive group of people who’ve turned out so quickly against this horrendous policy.

For far too long our clients and their communities at the public defender’s office have borne a large share of police violence and brutality. We oppose the expansion of the police state. We oppose the access to war weapons in San Francisco. We have far too many police reports where our clients have unnecessarily ended up in the hospital. And the city has had to pay out millions of dollars as a result of police violence. Yes, this department has struggled and continues to struggle with use of force against communities of color. We are not going to allow military weapons, especially killer robots, in the city. That money that goes towards these weapons belongs in community hands.

We need to invest in community, in addressing the root causes of violence, of poverty and racism. Not in, what, 17 robots, 12 of which work, two of which have bombs. This is ridiculous. SFPD cannot write their own rules. Police should not be in the business of policing themselves. Yes. They push this policy forward on vague and unsubstantiated fears. It is absolutely unnecessary. If you heard some of the extreme hypotheticals given at the Board of Supervisors to justify this, it is ridiculous. It’s unreasonable. It is irrational. It is merely to fan fears so that we fund the police more. We want to do the opposite. We want to defund the police.

And that’s what the public defender’s office wrote in our letter to the Board of Supervisors prior to that first vote: tools beg to be used. The police will figure out a way to use these weapons if we give them to them. There is no excuse for us to do this. One more thing to note is that this city, just in the last few years, voted down tasers, voted down Proposition H. And guess who makes these killer robots? The same corporation, Axon, the same corporation. We are not giving it any more San Francisco tax dollars, and taxpayers already have spoken about this. We are not arming the city more, the police more, to kill black and brown people in San Francisco. Thank you. And last, I just want to end by making it really clear. The Board of Supervisors has a choice. There is a second vote on this, I believe tomorrow. And it doesn’t have to be the same as the first completely illegitimate, unnoticed vote. Vote no on killer robots.

31:03 – Melissa Hernandez

Thank you so much. I would now like to welcome Sharif Zakout, the movement coordinator and organizer for the Arab Resource and Organizing Center.

31:16 – Sharif Zakout

Good morning. My name is Sharif Zakout, and I’m a resident of San Francisco and a leader with the Arab Resource and Organizing Center. As an organization that has served poor and working class Arabs and Muslims in San Francisco for the last 15 years, who’ve been historically targeted by SF surveillance and policing policies, we are outraged that instead of leading the way in progressive policies to ensure the safety and security of all communities, San Francisco has made the reckless decision of setting a dangerous precedent for the Bay Area and for the rest of the country.

This follows a dangerous pattern of using militarization as a solution to everyday problems. Extensive community feedback, data and research have already disproved this logic. Arming officers with killer robots does nothing to advance the overall well being of San Franciscans. Instead, it exacerbates increasing social inequities, including the disproportionate targeting of black and brown, poor and working class communities by police. We want to see leadership from our supervisors in advancing the quality of life here in the city. We do not want the use of dangerous technology. We do not want to expand militarism in our communities, and we are not enemy combatants to wage war upon. This decision is offensive and dangerous to us as SF voters and a shameful display of how San Francisco is veering away from being a progressive city, and is now indistinguishable from programs of the right wing. We cannot claim to be progressive or a sanctuary city and militarize our way out of social problems.

We do not want San Francisco to take a step backwards at a time when we claim to be committed to progress and racial justice and human rights. We applaud the bold action of Supervisors Ronen, Walton and Preston, and we urge other supervisors to reflect on the city that you are responsible for shaping and the implications of such an irresponsible decision. Do we want San Francisco to be known for killer robots? Or do we want to build on our history of progressive values and be known as a city that centers safety and the well being of all? We urge Supervisors Safaí, Chan, Stefani, Mar, Dorsey, Mandelman and Melgar to reverse your decision and stand on the right side of history. Thank you very much.

33:46 – Melissa Hernandez

(No Killer robots!) Thank you so much to everyone who has been here. We are going to go ahead and take some questions from the press if there are any. Yes, go ahead.

34:02

The second that’s happened. overturned last week’s reading what would happen will be the consequences of that either way.

34:15 – Dean Preston

Thank you. The immediate question was: if the vote were overturned tomorrow, what would be the consequence of that? Or if it were passed? So let me get into the weeds just briefly on the way this works. Any ordinance in the City and County of San Francisco has two readings. It’s read on first reading; that’s what happened last week. And then typically the second reading is almost more of a formality. 99% of the time, the supervisors who voted one way on the first reading will vote the same way.

Every once in a while there is an issue where, based on public input or further reflection, folks approach it differently on the second reading. It is a new vote. And there’s absolutely nothing about the fact that it passed eight-three last week that requires any of those supervisors to vote the same way tomorrow. So we’re hopeful that this public discussion will cause some of my colleagues who voted for this to change their minds. There are a number of options of what could happen tomorrow. The Board of Supervisors could adopt the policy.

And then 30 days after that, it would take effect, and it would become the law in San Francisco. The Board of Supervisors could reject the policy and vote against it, and then it would be dead. Or the Board of Supervisors could send the policy back to the committee where it was previously heard. Because, as some speakers have noted, the public was supposed to get 30 days before the committee hearing to give input on the policy. The policy was posted on SFPD’s website on a Friday for a hearing the following Monday. You’ll notice a lot of people called into the hearing last week, and the clerk had to say, “I’m sorry, you can’t give public comment on this.” And the reason is that public comment is supposed to happen in committee. But it didn’t happen here, because the policy wasn’t put out publicly like it’s required to be under state law. So we are asking the Board of Supervisors tomorrow to get at least a majority to agree to send this back to committee, so the public can have input. And then hopefully it will die there; that would be my preference. A follow up? Yes.

36:48

The issue you’ve been paying came to be? It’s my understanding that it was a California law that took effect recently that has kind of law enforcement do an inventory and define the boundaries of the military.

37:06

The follow up question is, why is this even before us? Her understanding is that there’s a state law that required this, and we have a number of speakers who have far more expertise in the state law. So I’ll let any of our speakers who want to respond supplement what I’m about to say, but that is correct. There’s a state law that was passed; our city attorney, David Chiu, was the author of that law when he was an Assemblymember. And it requires, for this military equipment that comes from the military, nationally, to local police departments, that there be an accounting of what equipment they have and the policies that govern the use. That policy from SFPD was brought to the Board of Supervisors and now comes to us. Before this state law, these things didn’t even come to the board of supervisors or local city councils.

I want to highlight that other cities have looked at this, and other states have looked at these issues, and rejected this kind of technology. And just across the bay: we had a speaker from the Anti Police-Terror Project here, who does a lot of work in Oakland. And I just want to highlight that the Oakland City Council rejected exactly this recently. And San Francisco should do the same. I don’t know if any other speakers want to address the state law question. Yeah.

38:26 – John Lindsay-Poland

My name is John Lindsay-Poland, and I’m with the American Friends Service Committee. The state law is AB 481, which requires every city and county and state law enforcement agency in the state to submit an inventory of all types of militarized equipment: not only equipment that comes from the Pentagon, but the equipment that gets purchased or gotten through a grant. That includes the assault rifles, and includes armored vehicles, as James talked about. It includes less-lethals like tear gas and impact projectiles. It includes robots, it includes drones. And there is no other city or county in the state. We have been reviewing these policies.

There’s no other jurisdiction in the state that has authorized the lethal use of robots. As he said, in Oakland there was a proposal by the department to allow robots to use lethal shotguns against people, and it was protested and pulled back. So this law does require reporting on all of these things. This is why we’re concerned that the department withdrew 375 assault rifles that it has in its arsenal, but that are now invisible to policy and to reporting. There are many other questions, as Supervisor Ronen said, about this policy. For example, not even the number of robots in SFPD’s arsenal is listed.

So you don’t know how many of which model it possesses, and therefore how many of them might be converted in some improvised way into killer robots. There are many problems. They’re all part of that context that James Burch talks about, of militarized policing that is going after the people who are always targeted by this type of policing. It’s just now more militarized.

40:18

Right? Go ahead. Yeah. Supervisor Preston, you were talking about a possible ballot measure. I was wondering if you could elaborate on that a little bit and what that might look like, and when we could possibly see that effort come to be.

40:36 – Dean Preston

The question was: there was reference to a possible ballot measure; what might that look like, and when would we see that? Let me say this: the people of San Francisco ultimately have decision making power over these kinds of issues, if it comes to that. But I want to take this one step at a time. We should not have to go to the ballot and expend the time and the resources to reject killer robots in San Francisco. I mean, I can’t believe I even have to say it.

But I will say this: there will be a vote tomorrow. I sincerely hope my colleagues change their vote from last week, and that we make the issue of a ballot measure moot. But certainly if this Board of Supervisors adopts a policy that gives the green light to the San Francisco Police Department to use robots to kill people, I would certainly hope that there will be a very active conversation with my office and advocates about the possibility of overturning that kind of decision at the ballot. But let’s hope we don’t get there.

41:58 – Melissa Hernandez

Just to answer the question: you can show up tomorrow, but they will not be taking public comment on this. As far as any media questions, are there any more? Yes.

42:13

Any idea? Where you?

42:26 – Dean Preston

Sorry, the question was: any idea what the vote will be tomorrow? So the answer to that is no, we don’t know what the vote will be tomorrow. And one of the things we’ve been encouraging folks to do is let our colleagues on the board know what you think of their vote, whether you liked it or didn’t like it. I will say this about last week’s vote. It was an eight-three vote. So some people might look at that and say, “Oh, it’s a done deal, right? It’s not a situation like six-five or something.” But I just want to note that three of the people who voted for this policy expressed very serious concerns at the hearing. One of them expressed what sounded like opposition to allowing killer robots in San Francisco. Two others expressed a desire for this to go back to committee.

Now they ended up voting for the policy last week. But I certainly think there is a very realistic possibility that they view it differently tomorrow. And you know, I’ve worked on a lot of issues on the San Francisco Board of Supervisors. I cannot think of an issue that I have worked on where there has been such unanimity among all of the people providing input, not just to me, but to all members of the Board of Supervisors, condemning this and demanding that we do not arm robots to kill in San Francisco. So I think that this is an unusual situation where it is my hope, and I’m cautiously optimistic, that one or more supervisors will change their vote tomorrow, and at minimum send this back to committee.

44:11

All right, thank you so much. I think I’m going to go ahead and close this out, and if press have any further questions, we can take them over here. Thank you.

Come to the Rally and Press Conference Monday Dec 5th 9:30 am and Demand the SF Board of Supervisors Vote NO on “Killer Robots”

Update: December 5: Transcription and Audio Video Downloads here: https://www.aaronswartzday.org/no-killer-robots-press-conference-december-5-2022/

***

December 4, 2022

Contact: Tracy Rosenberg, Co-Founder – Aaron Swartz Day Police Surveillance Project

email: tracy@media-alliance.org

phone: 510-684-6853

When: 9:30 am

Where: City Hall, Polk Street Steps (Civic Center Park Facing- Steps)

What: Rally and Press Conference organized by Dean Preston, District 5 Supervisor, San Francisco (Who voted “no” on this issue last week) — Also the EFF and ACLU will be there!

Why: To convince the SF Board of Supervisors to vote “No” on the final vote going on this Tuesday at 2pm or postpone the 2nd vote to a later date.

- Summary

- Exigent Circumstances

- How soon can this thing pass the Board of Supervisors? (Or “Where is the Policy Amendment exactly in its Process?”)

- What language should the Policy Amendment include?

- About Dallas

Summary

This policy amendment would allow the SFPD to arm any existing robots. For instance, it would let them take the bomb robots that they already have, which are usually used to find and dismantle bombs, and retrofit them to actually carry a bomb into a situation, as happened in Dallas back in 2016. (https://www.nytimes.com/2016/07/09/science/dallas-bomb-robot.html)

Police Departments can already violate their standing policies in extreme situations citing “exigent circumstances” (including an imminent threat to life), when everything else has been tried and failed. For this reason, a policy amendment isn’t actually necessary for the robots to be used in this manner in a crisis scenario, as long as the department self-reports.

The policy amendment is an attempt to make the use of lethal force by robots a “standard operating procedure” rather than something that is only allowed during the most extreme of circumstances. The policy amendment’s only requirement to use one of these armed robots is that it be authorized by a single one of the three highest commanding officers, who only needs to “evaluate” the situation prior to use.

Exigent Circumstances

Police use the “exigent circumstances” exception frequently when they want to borrow and use surveillance equipment that isn’t authorized by the local surveillance or military equipment policy for regular use. For instance, when the city of Oakland borrows drones from Alameda County, or when San Francisco borrows a cell site simulator from the Department of Homeland Security.

“Exigent circumstances” is how the cops can get around restrictions they would otherwise be bound by, and when misused, it can cover for First and Fourth Amendment violations. It also gives the cops the flexibility to do basically anything else they want to do, when the circumstances are extreme and abnormal. In the case of surveillance and militarized equipment (which is what the robots fall under), an exigent circumstance clause lets the equipment be used in ways which the policy does not otherwise allow, on a one-time emergency basis. (https://www.law.cornell.edu/wex/exigent_circumstances)

Here, for example, are some exigent circumstance reports for drone use in Oakland when drone use was not otherwise permitted.

https://oaklandprivacy.org/wp-content/uploads/2022/12/January-2020-Exigent-Drone-Use.pdf

https://oaklandprivacy.org/wp-content/uploads/2022/12/August-2020-Exigent-Drone-Use.pdf

We only know about these, because they have to report on themselves when they use unapproved technology due to an emergency. But it is possible to allow them to do so, as long as they disclose the use and explain the “exigent circumstances” that were occurring.

But they cannot use and should not be able to use unapproved equipment or techniques without a present severe emergency and disclosure. That is the difference between exigent-use-only and standard operating procedure. The proposed policy amendment goes beyond exigent use to allow standard use whenever police commanders decide to do so and does not require prompt public disclosure after use.


How soon can this thing pass the Board of Supervisors? (Or “Where is the Policy Amendment exactly in its Process?”)

The “first reading” of the ordinance was already voted on and passed 8-3, but several of the “Yes” votes were clearly uncomfortable with the situation. (We’re not sure why they voted “yes” anyway…)

The next vote is this TUESDAY DECEMBER 6 at 2 PM.

The amended ordinance with the killer robot permission will become law after the second reading, so if we can’t convince some of the supervisors to change their stance in the next few days, San Francisco will become the first city in the country to explicitly authorize deadly force by robots.

What language should the Policy Amendment include?

Well, it should say that robots cannot be armed under any circumstances.

However, since we may need to just slow down a runaway train, we wish to make it clear what any Amendment would need to include.

The policy should say it would only be used:

1) under threat of imminent and significant casualties or severe physical injury, and

2) only after de-escalation efforts and alternative use of force techniques have been tried and failed to subdue the subject, and

3) where there would be no collateral loss of life whatsoever, including bystanders and hostages.

These minimum requirements are humane in a way that hopefully does not need justification or debate.

About Dallas

In July of 2016 in Dallas, a suspect had shot and injured a number of policemen and barricaded himself in a building. The Dallas Police Department armed an existing bomb sniffing robot and sent it into the building to blow it up and kill the suspect. The Dallas Police Department was extremely lucky that there was no collateral damage. The suspect was killed.

It is the only known use in the United States of a robot with a bomb being used by civilian law enforcement. In 1985, the Philadelphia Police Department delivered explosive bombs via helicopter when they bombed the headquarters of the MOVE black liberation group. The MOVE bombing killed at least 5 children, burned down over 60 residences and is generally seen as egregious police violence.

The Dallas incident is often used to justify why it might be necessary to arm a robot with bombs, but if we examine it closely, it does quite the opposite.

Considering it was one guy, locked inside a building, alone, with little or no chance of being able to hurt anyone else unless they were to force their way inside the building, it’s pretty easy to say that the Dallas PD jumped the gun. DPD had lots of other options short of blowing up the building. For instance, they could have waited hours or days for the guy to eventually come out, and stayed far enough away so he couldn’t shoot them.

With all this in mind, there is no reason for San Francisco to establish the blueprint for regularized use of killer robots with few restrictions besides an evaluation by senior police command.

Aaron Would Have Been 35 Years Old Today

It has been eight years since Aaron’s death, on January 11, 2013.

We miss you Aaron.

November 8, 2021 would have been Aaron’s 35th birthday, but instead we mourn our friend and wonder what could have been, had he not taken his own life eight years ago after being terrorized by a career-driven prosecutor and U.S. Attorney who decided to just make shit up, make an example out of Aaron, impress their bosses and further their own careers.

As it turns out though, Aaron’s downloading wasn’t even illegal, as he was a Harvard Ethics Fellow at the time and Harvard and MIT had contractual agreements allowing Aaron to access those materials en masse.

But all this didn’t come to light until it was too late.

Aaron was careful not to tell his friends too much about his case for fear he would involve them in the quagmire. In truth, we wouldn’t have minded doing anything we could to help him, but we didn’t realize he needed help, and that his grand jury’s runaway train had gone so far off the rails.

We should have known though, as Grand Juries are a dangerous, outdated practice that gives prosecutors unlimited power, making it easy to manipulate the way that witnesses and evidence are presented to the Grand Jury and convince jurors of almost anything. These kinds of proceedings also often violate subjects’ and witnesses’ constitutional rights in different ways. For these reasons, most civilized countries have transitioned away from them in favor of preliminary hearings.

We learned many other lessons from his case, after the smoke had cleared. We learned that Aaron’s Grand Jury prosecutor, Assistant U.S. Attorney Stephen Heymann, and the U.S. Attorney in charge of his case, Carmen Ortiz, were so obsessed with trying to make names for themselves, they were willing to fabricate charges and evidence in order to get indictments that would otherwise be unachievable.

As Dan Purcell explained:

“Steve Heymann did what bureaucrats and functionaries often choose to do. He wanted to make a big case to justify his existence and justify his budget. The casualties be damned…

Our bottom line was going to be that Aaron had done only what MIT permitted him to do. He hadn’t gained unauthorized access to anything. He had gained access to JSTOR with full authorization from MIT. Just like anyone in the jury pool, anyone reading Boing Boing, or anyone in the country could have done.

We hoped that the jury would understand that and would acquit Aaron, and it quickly became obvious to us that there really wasn’t going to be opportunity to resolve the case short of trial because Steve Heymann was unreasonable.”

We also learned that MIT was more concerned with its own reputation than standing up for the truth or protecting Aaron. In fact, we learned that MIT decided to assist the government with its case against Aaron, rather than helping him by pressuring the Feds to drop the case, even after JSTOR had made it clear it did not wish to prosecute.

We know all this because Kevin Poulsen explained to us how he had to sue the Department of Homeland Security to get access to documents in Aaron’s FBI file, and that MIT blocked their release – intervening as a third party – and demanding to get a chance to further redact them before they were released to Kevin – and the Judge granted their request! Only time will tell what MIT was so worried about, but its behavior suggests that there may have been some kind of cover-up regarding its involvement in Aaron’s case.

Most recently, thanks to Property of the People’s Ryan Shapiro, we learned that Aaron had an erroneous code in his FBI record that meant “International Terrorism involving Al Qaeda” – deriving from his sending a single email to the University of Pittsburgh, which might explain why the FBI was so suspicious of him during his case.

There are still many pieces of the puzzle missing, but we won’t stop trying to put it all together. We hope you will join us on November 13th to honor him and learn about his projects and ideas that are still bearing fruit to this day, such as SecureDrop, Open Library, and the Aaron Swartz Day Police Surveillance Project.

Until then, we will continue to come together to help each other and share information, knowledge and resources, and to try to make things better in our world.

Howl For Aaron Swartz (by Brewster Kahle)

Howl for Aaron Swartz

Written by Brewster Kahle, shortly after Aaron’s Death, on January 11, 2013.

Howl for Aaron Swartz

New ways to create culture

Smashed by lawsuits and bullying

Laws that paint most of us criminal

Inspiring young leaders

Sharing everything

Living open source lives

Inspiring communities selflessly

Organizing, preserving

Sharing, promoting

Then crushed by government

Crushed by politicians, for a modest fee

Crushed by corporate spreadsheet outsourced business development

New ways

New communities

Then infiltrated, baited

Set-up, arrested

Celebrating public spaces

Learning, trying, exploring

Targeted by corporate security snipers

Ending up in databases

Ending up in prison

Traps set by those that promised change

Surveillance, wide-eyes, watching everyone now

Government surveillance that cannot be discussed or questioned

Corporate surveillance that is accepted with a click

Terrorists here, Terrorists there

More guns in schools to promote more guns, business

Rendition, torture

Manning, solitary, power

Open minds

Open source

Open eyes

Open society

Public access to the public domain

Now closed out of our devices

Closed out of owning books

Hands off

Do not open

Criminal prosecution

Traps designed by the silicon wizards

With remarkable abilities to self-justify

Traps sprung by a generation

That vowed not to repeat

COINTELPRO and dirty tricks and Democratic National Conventions

Government-produced malware so sophisticated

That career engineers go home each night thinking what?

Saying what to their families and friends?

Debt for school

Debt for houses

Debt for life

Credit scores, treadmills, with chains

Inspiring and optimistic explorers navigating a sea of traps set by us

I see traps ensnare our inspiring generation

Leaders and discoverers finding new ways and getting crushed for it

Brewster Kahle: Plea Bargaining and Torture

Audio Clip:

Link to video of Brewster’s talk (Direct link to Brewster’s talk from within the complete video of all speakers from the event.)

The transcript below has been edited slightly for readability.

Complete transcription:

Welcome to the Internet Archive. I’m Brewster Kahle, Founder and Digital Librarian here, and welcome to our home.

For those that haven’t been here before… The little blinking lights on the 5 petabytes of servers that are in the back, are actually serving millions of people a day, and being kind of a digital library. The little sculptures around are people who have worked at the Internet Archive, including one of Aaron Swartz up toward the front. In the front because he was the architect and lead builder of OpenLibrary.org, which is an Internet Archive site. And also worked on putting Pacer into the Internet Archive (RECAP), Google Books public domain books, and other projects that we’ve worked on over the years. So with this, we’d like to say, “Happy Birthday Aaron, we miss you.”

I’m going to talk about a cheery subject: Plea Bargaining and Torture. When I was trying to think through the approach that was used to bring down Aaron Swartz and to try to make a symbol out of him, I typed these words into my favorite search engine (“Plea Bargaining and Torture”) and back came a paper on the subject, that I am going to summarize and also elaborate on.

I found this wonderful paper, by a Yale Law Professor, in 1978, comparing European Torture Law and current Plea Bargaining. This might sound a little bit far fetched, but stick with me for a minute.

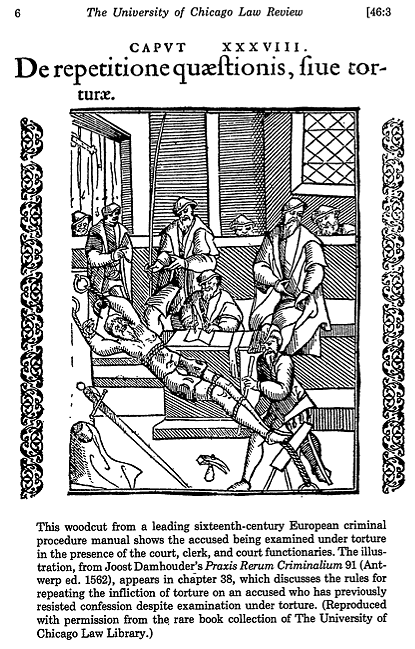

European Torture Law, I had no idea, was actually a regulated, implemented, part of their court system. It started in 1215, when they stopped going and saying “you’re guilty because God said so.” They had to come up with something else. So they basically had to come up with something that was *that sure.* And they said you either had to have two eyewitnesses, or, you had to confess. And this was actually an unworkable system. And instead of changing that, they tried to force confessions, and they had a whole system for how to do it. They had basically how much regulation, how much leg clamping you had. How many minutes of this, for different crimes.

So you can see in this diagram, and you can see this guy getting tortured here, but he is surrounded by court clerks. So, it’s not this, sort of, the Spanish Inquisition, as Monty Python would have it. This was actually a smart people state-sponsored system that was trying to fix a bug in their court system, in that it was too hard to convict people. So they tortured them into confessions.

Sound familiar?

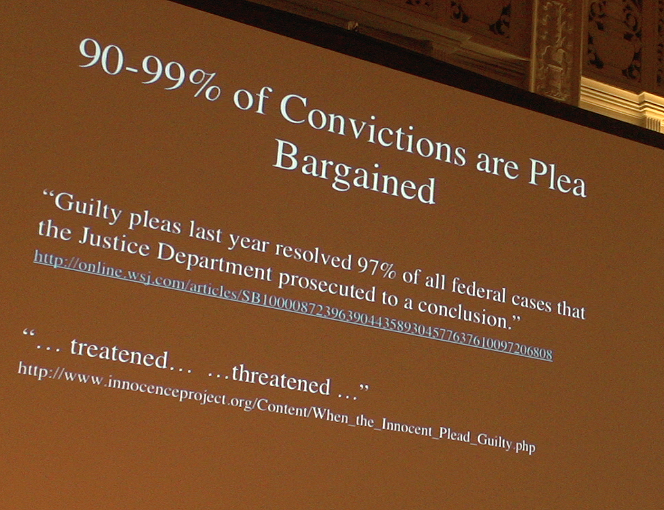

So, in the United States, now, we have between 90 and 99 percent. It depends whether you are in Federal or State court, or which county you’re in. 97% of all convictions at the Federal level are done with plea bargaining.

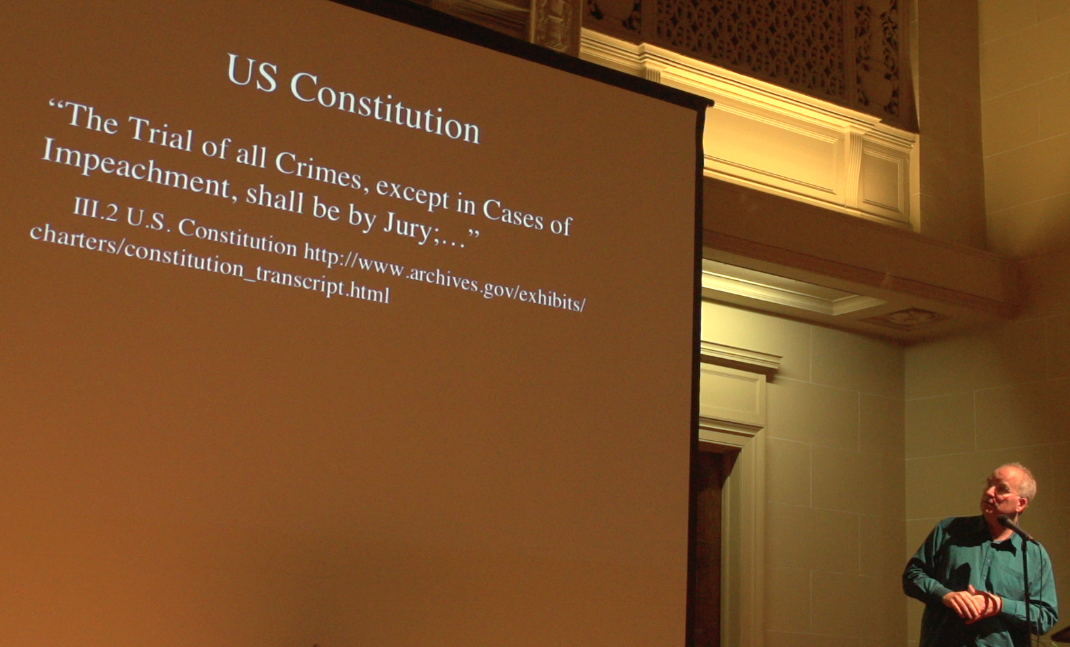

So you have basically no chance of having a jury before your peers. This is basically a threat system. They actually did studies in Florida where they jacked up the sentences, and the number of people that plea bargained went up. It’s a system to handle convictions outside of the Court System. Outside of the Jury System. Unfortunately, our Constitution actually has something to say about this that’s in pretty direct contradiction:

“The Trial of all Crimes, except in Cases of Impeachment, shall be by Jury;…”

– Article III.2 U.S. Constitution (http://www.archives.gov/exhibits/charters/constitution_transcript.html)

But as another thinker on this has said, basically Plea Bargains have made jury trials obsolete.

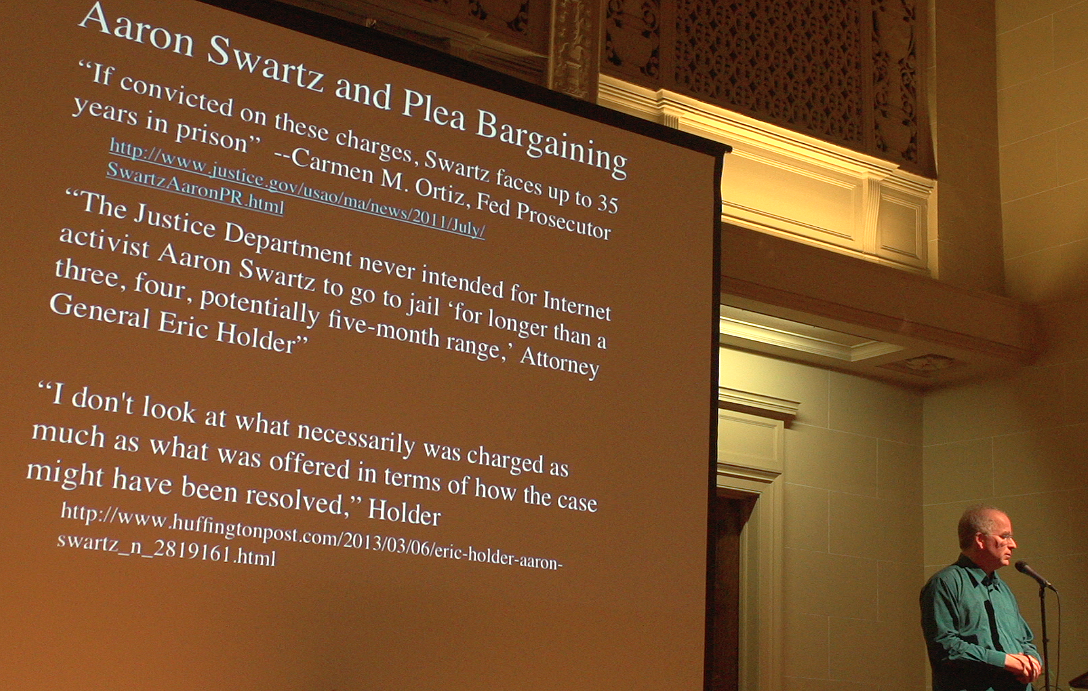

When Aaron Swartz was threatened with 35 years, it’s got to have hit a young, idealistic person pretty hard. 35 years for downloading books too fast from the library? This doesn’t make any sense. Yet that’s a pretty big threat, and may have had something to do with it. When this sort of played out, after his death, I just found these quotations notable enough that I’m going to sort of, bore you, with putting them up.

So he was faced with 35 years, thanks to Carmen Ortiz. Wonderful. And the Justice Department had never intended for this. “No more than a three, four, or potentially five-month range,” said the top attorney in the United States. And we shouldn’t really judge what the prosecutors were doing, based on what they threatened him (with), just by what they were going to do if he pled guilty.

So I think we’ve got a real problem with this. So what’s to do?

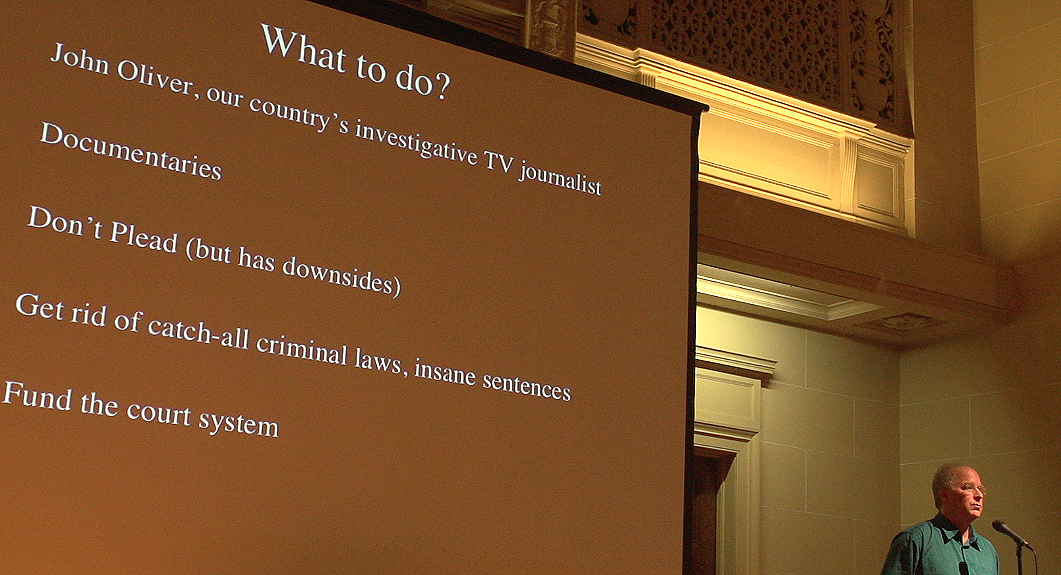

Well, I say we should make some noise about it. I think some of the reasons that we don’t make noise about it is it doesn’t happen to our friends. This sort of thing happens to a lot of “other people.” But, in this case, it did happen to our friend, and I think that it’s important for us to respond to it.

I think John Oliver has been on a roll, in terms of some of these unbelievable sorts of diatribes of going and actually doing research and bringing it in front of people in an interesting way. I’d also like to pitch: “is there a documentarian in the house, say?” That we should go, and really go and put this type of behavior in front of more people.

There are others that are trying by not pleading, but it has its downsides. Basically, gum up the courts. At least for me, I take off my… I don’t go through the surveillance device in the airports, and yes it gums them up a little bit, and I feel like that’s my part to help. Would I actually, if it came right down to it, not plead? To help move this forward? I don’t know. By and large, we’ve got ridiculous catch-all laws, and we’ve got sentences that are just outrageous, and these have just got to come under control, as well as let’s actually hire some judges.

Chelsea Manning’s Op-Ed for the NY Times: The Dystopia We Signed Up For

From September 13, 2017

By Chelsea Manning

In recent years our military, law enforcement and intelligence agencies have merged in unexpected ways. They harvest more data than they can possibly manage, and wade through the quantifiable world side by side in vast, usually windowless buildings called fusion centers.

Such powerful new relationships have created a foundation for, and have breathed life into, a vast police and surveillance state. Advanced algorithms have made this possible on an unprecedented level. Relatively minor infractions, or “microcrimes,” can now be policed aggressively. And with national databases shared among governments and corporations, these minor incidents can follow you forever, even if the information is incorrect or lacking context…

In literature and pop culture, concepts such as “thoughtcrime” and “precrime” have emerged out of dystopian fiction. They are used to restrict and punish anyone who is flagged by automated systems as a potential criminal or threat, even if a crime has yet to be committed. But this science fiction trope is quickly becoming reality. Predictive policing algorithms are already being used to create automated heat maps of future crimes, and like the “manual” policing that came before them, they overwhelmingly target poor and minority neighborhoods.

The world has become like an eerily banal dystopian novel. Things look the same on the surface, but they are not. With no apparent boundaries on how algorithms can use and abuse the data that’s being collected about us, the potential for it to control our lives is ever-growing.

*** full text below for archival purposes***

By Chelsea Manning

For seven years, I didn’t exist.

While incarcerated, I had no bank statements, no bills, no credit history. In our interconnected world of big data, I appeared to be no different than a deceased person. After I was released, that lack of information about me created a host of problems, from difficulty accessing bank accounts to trouble getting a driver’s license and renting an apartment.

In 2010, the iPhone was only three years old, and many people still didn’t see smartphones as the indispensable digital appendages they are today. Seven years later, virtually everything we do causes us to bleed digital information, putting us at the mercy of invisible algorithms that threaten to consume our freedom.

Information leakage can seem innocuous in some respects. After all, why worry when we have nothing to hide?

We file our taxes. We make phone calls. We send emails. Tax records are used to keep us honest. We agree to broadcast our location so we can check the weather on our smartphones. Records of our calls, texts and physical movements are filed away alongside our billing information. Perhaps that data is analyzed more covertly to make sure that we’re not terrorists — but only in the interest of national security, we’re assured.

Our faces and voices are recorded by surveillance cameras and other internet-connected sensors, some of which we now willingly put inside our homes. Every time we load a news article or page on a social media site, we expose ourselves to tracking code, allowing hundreds of unknown entities to monitor our shopping and online browsing habits. We agree to cryptic terms-of-service agreements that obscure the true nature and scope of these transactions.

According to a 2015 study from the Pew Research Center, 91 percent of American adults believe they’ve lost control over how their personal information is collected and used.

Just how much they’ve lost, however, is more than they likely suspect.

The real power of mass data collection lies in the hand-tailored algorithms capable of sifting, sorting and identifying patterns within the data itself. When enough information is collected over time, governments and corporations can use or abuse those patterns to predict future human behavior. Our data establishes a “pattern of life” from seemingly harmless digital residue like cellphone tower pings, credit card transactions and web browsing histories.

The consequences of our being subjected to constant algorithmic scrutiny are often unclear. For instance, artificial intelligence — Silicon Valley’s catchall term for deep-thinking and deep-learning algorithms — is touted by tech companies as a path to the high-tech conveniences of the so-called internet of things. This includes digital home assistants, connected appliances and self-driving cars.

Simultaneously, algorithms are already analyzing social media habits, determining creditworthiness, deciding which job candidates get called in for an interview and judging whether criminal defendants should be released on bail. Other machine-learning systems use automated facial analysis to detect and track emotions, or claim the ability to predict whether someone will become a criminal based only on their facial features.

These systems leave no room for humanity, yet they define our daily lives. When I began rebuilding my life this summer, I painfully discovered that they have no time for people who have fallen off the grid — such nuance eludes them. I came out publicly as transgender and began hormone replacement therapy while in prison. When I was released, however, there was no quantifiable history of me existing as a trans woman. Credit and background checks automatically assumed I was committing fraud. My bank accounts were still under my old name, which legally no longer existed. For months I had to carry around a large folder containing my old ID and a copy of the court order declaring my name change. Even then, human clerks and bank tellers would sometimes see the discrepancy, shrug and say “the computer says no” while denying me access to my accounts.

Such programmatic, machine-driven thinking has become especially dangerous in the hands of governments and the police.

In recent years our military, law enforcement and intelligence agencies have merged in unexpected ways. They harvest more data than they can possibly manage, and wade through the quantifiable world side by side in vast, usually windowless buildings called fusion centers.

Such powerful new relationships have created a foundation for, and have breathed life into, a vast police and surveillance state. Advanced algorithms have made this possible on an unprecedented level. Relatively minor infractions, or “microcrimes,” can now be policed aggressively. And with national databases shared among governments and corporations, these minor incidents can follow you forever, even if the information is incorrect or lacking context.

At the same time, the United States military uses the metadata of countless communications for drone attacks, using pings emitted from cellphones to track and eliminate targets.

In literature and pop culture, concepts such as “thoughtcrime” and “precrime” have emerged out of dystopian fiction. They are used to restrict and punish anyone who is flagged by automated systems as a potential criminal or threat, even if a crime has yet to be committed. But this science fiction trope is quickly becoming reality. Predictive policing algorithms are already being used to create automated heat maps of future crimes, and like the “manual” policing that came before them, they overwhelmingly target poor and minority neighborhoods.

The world has become like an eerily banal dystopian novel. Things look the same on the surface, but they are not. With no apparent boundaries on how algorithms can use and abuse the data that’s being collected about us, the potential for it to control our lives is ever-growing.

Our drivers’ licenses, our keys, our debit and credit cards are all important parts of our lives. Even our social media accounts could soon become crucial components of being fully functional members of society. Now that we live in this world, we must figure out how to maintain our connection with society without surrendering to automated processes that we can neither see nor control.

The Intentionality of Evil

By Aaron Swartz:

Everybody thinks they’re good.

And if that’s the case, then intentionality doesn’t really matter. It’s no defense to say (to take a recently famous example) that New York bankers were just doing their jobs, convinced that they were helping the poor or something, because everybody thinks they’re just doing their jobs; Eichmann thought he was just doing his job.

Eichmann, of course, is the right example because it was Hannah Arendt’s book “Eichmann in Jerusalem: A Report on the Banality of Evil” that is famously cited for this thesis. Eichmann, like almost all terrorists and killers, was by our standards a perfectly normal and healthy guy doing what he thought were perfectly reasonable things.

And if that normal guy could do it, so could we. And while we could argue who’s worse — them or us — it’s a pointless game since it’s our actions that we’re responsible for. And looking around, there’s no shortage of monstrous crimes that we’ve committed.

Complete version from Aaron’s blog, June 23, 2005:

As children we’re fed a steady diet of comic books (and now, movies based off of them) in which brave heroes save the planet from evil people. It’s become practically conventional wisdom that such stories wrongly make the line between good and evil too clear — the world is more nuanced than that, we’re told — but this isn’t actually the problem with these stories. The problem is that the villains know they’re evil.

And people really grow up thinking things work this way: evil people intentionally do evil things. But this just doesn’t happen. Nobody thinks they’re doing evil — maybe because it’s just impossible to be intentionally evil, maybe because it’s easier and more effective to convince yourself you’re good — but every major villain had some justification to explain why what they were doing was good. Everybody thinks they’re good.

And if that’s the case, then intentionality doesn’t really matter. It’s no defense to say (to take a recently famous example) that New York bankers were just doing their jobs, convinced that they were helping the poor or something, because everybody thinks they’re just doing their jobs; Eichmann thought he was just doing his job.

Eichmann, of course, is the right example because it was Hannah Arendt’s book Eichmann in Jerusalem: A Report on the Banality of Evil that is famously cited for this thesis. Eichmann, like almost all terrorists and killers, was by our standards a perfectly normal and healthy guy doing what he thought were perfectly reasonable things.

And if that normal guy could do it, so could we. And while we could argue who’s worse — them or us — it’s a pointless game since it’s our actions that we’re responsible for. And looking around, there’s no shortage of monstrous crimes that we’ve committed.

So the next time you mention one to someone and they reply “yes, but we did it with a good intent” explain to them that’s no defense; the only people who don’t are characters in comic books.


Angela Davis: This is a very exciting moment.

Video Clip here:

https://twitter.com/Channel4News/status/1270434723064696835?s=20

Full interview here: https://www.youtube.com/watch?v=i3TU3QaarQE&feature=emb_logo

Full Transcription of video clip:

Change has to come in many forms. It has to be political. It has to be economic. It has to be social.

What we are witnessing are very new demands. For who knows how long, we’ve been calling for accountability for individual police officers responsible for what amounts to lynchings. For continuing the tradition of what amounts to extra-judicial lynching, but under the color of the law.

What we are seeing now are new demands. Demands to demilitarize the police. Demands to defund the police. Demands to dismantle the police and envision different modes of public safety.

We’re asked now to consider how we might imagine justice in the future.

This is a very exciting moment. I don’t know if we have ever experienced this kind of global challenge to racism and to the consequences of slavery and colonialism.

Supreme Court: All federal laws that prohibit discrimination on the basis of sex also outlaw discrimination based on sexual orientation or gender identity

Big decision by the Supremes today re: Title VII of the Civil Rights Act!

Here’s an op ed by Erwin Chemerinsky, Dean of the UC Berkeley School of Law: https://news.yahoo.com/op-ed-supreme-court-victory-175702939.html

From the op-ed by Erwin Chemerinsky:

The decision is hugely important in protecting gay, lesbian and transgender individuals from discrimination in workplaces across the country. But its significance is broader than that. It should be understood to say that all federal laws that prohibit discrimination on the basis of sex also outlaw discrimination based on sexual orientation or gender identity.

Here is the decision itself:

https://d2qwohl8lx5mh1.cloudfront.net/8hVHe52Cq4sPdF0wEaTaCQ/content

From the Supreme Court decision itself (page 13):

From the ordinary public meaning of the statute’s language at the time of the law’s adoption, a straightforward rule emerges: An employer violates Title VII when it intentionally fires an individual employee based in part on sex. It doesn’t matter if other factors besides the plaintiff’s sex contributed to the decision. And it doesn’t matter if the employer treated women as a group the same when compared to men as a group. If the employer intentionally relies in part on an individual employee’s sex when deciding to discharge the employee—put differently, if changing the employee’s sex would have yielded a different choice by the employer—a statutory violation has occurred. Title VII’s message is “simple but momentous”: An individual employee’s sex is “not relevant to the selection, evaluation, or compensation of employees.” Price Waterhouse v. Hopkins, 490 U. S. 228, 239 (1989) (plurality opinion).

The statute’s message for our cases is equally simple and momentous: An individual’s homosexuality or transgender status is not relevant to employment decisions. That’s because it is impossible to discriminate against a person for being homosexual or transgender without discriminating against that individual based on sex.

I genuinely think this is a full win. We fully won. The HHS rule is completely void.

— Chase Strangio (@chasestrangio) June 15, 2020